Overview

Falcon LLM is a foundational large language model (LLM) with 40 billion parameters, trained on one trillion tokens. The Technology Innovation Institute (TII) has released it as Falcon 40B.

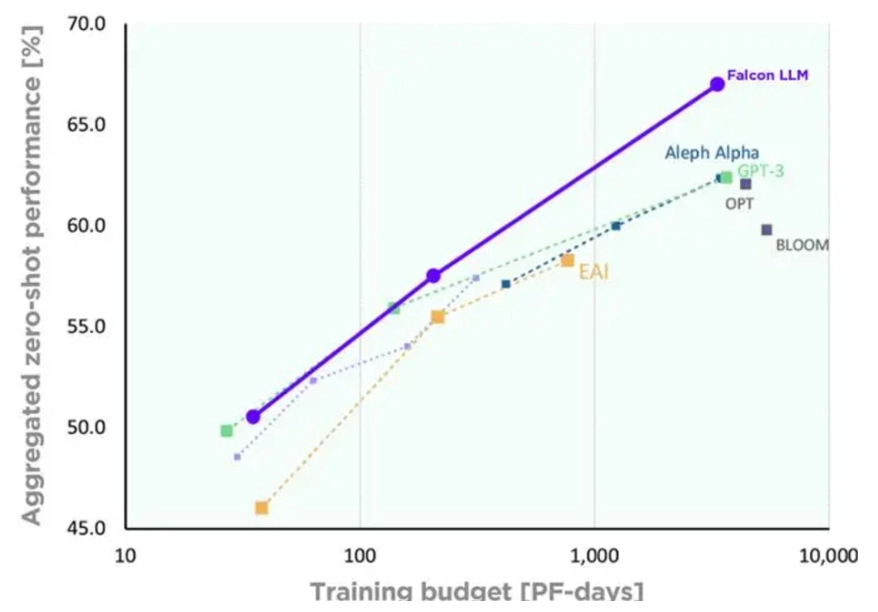

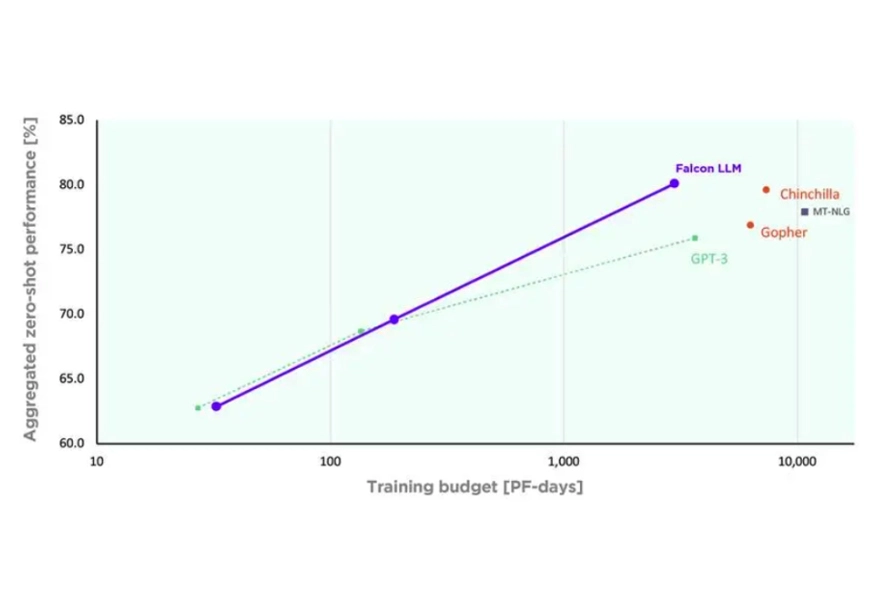

Falcon was trained with only 75 percent of GPT-3’s training compute, 40 percent of Chinchilla’s, and 80 percent of PaLM-62B’s.
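As a rough sanity check on these ratios, training compute for a dense transformer is often approximated as C ≈ 6·N·D FLOPs, where N is the parameter count and D the number of training tokens. Inference costs about 2·N FLOPs per generated token. The sketch below applies that approximation. The parameter and token counts for GPT-3 (175B parameters, 300B tokens), Chinchilla (70B, 1.4T) and PaLM-62B (62B, 780B) are assumptions taken from those models' own papers, not figures from this page, and `train_flops`, `inference_flops_per_token` and `compute_ratios` are hypothetical helper names.

```python
# Rough compute comparison using the common C ~ 6*N*D approximation
# (N = parameters, D = training tokens). Figures for the non-Falcon models
# are assumptions taken from their public papers, not from this page.

MODELS = {
    # name: (parameters, training tokens)
    "Falcon-40B": (40e9, 1.0e12),
    "GPT-3": (175e9, 300e9),
    "Chinchilla": (70e9, 1.4e12),
    "PaLM-62B": (62e9, 780e9),
}


def train_flops(params: float, tokens: float) -> float:
    """Approximate training FLOPs: ~6 FLOPs per parameter per token."""
    return 6.0 * params * tokens


def inference_flops_per_token(params: float) -> float:
    """Approximate forward-pass FLOPs per generated token: ~2 per parameter."""
    return 2.0 * params


def compute_ratios(reference: str = "Falcon-40B") -> dict[str, float]:
    """Reference model's training compute as a fraction of each other model's."""
    ref = train_flops(*MODELS[reference])
    return {
        name: ref / train_flops(n, d)
        for name, (n, d) in MODELS.items()
        if name != reference
    }


if __name__ == "__main__":
    for name, ratio in compute_ratios().items():
        print(f"Falcon-40B vs {name}: {ratio:.0%} of training compute")
    falcon_n = MODELS["Falcon-40B"][0]
    gpt3_n = MODELS["GPT-3"][0]
    per_token = inference_flops_per_token(falcon_n) / inference_flops_per_token(gpt3_n)
    print(f"Inference FLOPs per token vs GPT-3: {per_token:.0%}")
```

Run as a script, it prints about 76%, 41% and 83% for GPT-3, Chinchilla and PaLM-62B, and about 23% per generated token relative to GPT-3. These values are broadly in line with the rounded percentages quoted on this page. The 6·N·D rule ignores attention and other overheads, so treat it as an order-of-magnitude check only.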

How was Falcon LLM developed?

- Falcon was built using custom tooling: a unique data pipeline that extracts high-quality content from web data for training, and a custom training codebase independent of the work of NVIDIA, Microsoft, or Hugging Face.

- A particular focus was put on data quality at scale. LLMs are notoriously sensitive to the quality of their training data, so significant care went into building a data pipeline that would both scale to tens of thousands of CPU cores for fast processing and extract high-quality content from the web through extensive filtering and deduplication.

- The architecture of Falcon was optimized for performance and efficiency. By combining high-quality data with these optimizations, Falcon significantly outperforms GPT-3 with only 75% of the training compute budget, and it requires a fifth of the compute at inference time.

- Falcon matches the performance of state-of-the-art LLMs from DeepMind, Google, and Anthropic.

- Falcon is a 40-billion-parameter autoregressive, decoder-only model trained on 1 trillion tokens. It was trained on 384 GPUs on AWS over the course of two months.

- Falcon’s pretraining dataset was built from public crawls of the web. Starting from Common Crawl dumps, significant filtering (to remove machine-generated text and adult content) and deduplication produced a pretraining dataset of nearly five trillion tokens.

- To broaden Falcon’s abilities, this dataset was then extended with a few curated sources such as research papers and conversations from social media.

- Finally, Falcon’s performance was validated against open-source benchmarks such as the EleutherAI LM Evaluation Harness (EAI Harness), HELM, and BIG-bench.

- Falcon can generate creative text and solve complex problems.

- It can be used in chatbots, customer service operations, virtual assistants, language translation, content generation, and sentiment analysis.

- A broad range of use cases is foreseen for Falcon, although we are most excited about applications that reduce and automate “repetitive” work.

- Falcon will help Emirati companies and startups become more efficient, streamlining internal processes and giving back time for employees to focus on what matters.

- At an individual level, chatbots embedding Falcon will be able to assist users in their daily lives.
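The filtering and deduplication steps described above can be illustrated with a deliberately simplified sketch. This is not TII’s pipeline, whose internals this page does not describe. It assumes document-level heuristic filters plus exact deduplication on normalized text. All names (`normalize`, `passes_quality_filters`, `filter_and_dedup`) and thresholds (`MIN_WORDS`, `MAX_SYMBOL_RATIO`, `BLOCKLIST`) are hypothetical and tiny for the demo. Production web-scale pipelines typically also perform fuzzy (near-duplicate) deduplication, for example with MinHash, and run sharded across many machines.

```python
import hashlib
import re
import unicodedata
from typing import Iterable, Iterator

# Hypothetical thresholds, deliberately tiny for illustration only.
MIN_WORDS = 5
MAX_SYMBOL_RATIO = 0.1
BLOCKLIST = {"example-blocked-term"}  # stand-in for an adult-content filter


def normalize(text: str) -> str:
    """Apply NFKC, lowercase, and collapse whitespace so trivial variants match."""
    text = unicodedata.normalize("NFKC", text).lower()
    return re.sub(r"\s+", " ", text).strip()


def passes_quality_filters(text: str) -> bool:
    """Toy document-level heuristics standing in for real quality filters."""
    words = text.split()
    if len(words) < MIN_WORDS:
        return False
    symbols = sum(1 for ch in text if not (ch.isalnum() or ch.isspace()))
    if symbols / max(len(text), 1) > MAX_SYMBOL_RATIO:
        return False
    return not any(w in BLOCKLIST for w in words)


def filter_and_dedup(docs: Iterable[str]) -> Iterator[str]:
    """Yield documents that pass the filters, skipping exact duplicates after normalization."""
    seen: set[str] = set()
    for doc in docs:
        norm = normalize(doc)
        if not passes_quality_filters(norm):
            continue
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        yield doc


if __name__ == "__main__":
    sample = [
        "Falcon is a large language model trained on web data.",
        "falcon is a  large language model trained on web data.",  # duplicate once normalized
        "Too short.",
        "$$$ ### @@@ !!! %%% ^^^",  # mostly symbols
    ]
    print(list(filter_and_dedup(sample)))
```

Hashing the normalized text rather than the raw text lets the second sample, which differs only in case and spacing, be recognized as a duplicate. Only the first sample survives. Streaming with a generator keeps memory bounded to the set of seen digests.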

Falcon 40B is Open Sourced

Why Open Source?

Open sourcing technology promotes collaboration and drives innovation by allowing a global community of developers to share their expertise and contribute to the growth and enhancement of the software. It also promotes transparency, enabling users to inspect and verify the code for security and reliability. The Technology Innovation Institute hopes to advance knowledge and research in LLMs in a safe and transparent manner, leading to more uses of AI for good.

Access Falcon LLM

Research

Our experts regularly share our work through a range of publications, including books, journal articles, patents, presentations and white papers.

Falcon is a new state-of-the-art Large Language Model. Falcon was built from scratch in Abu Dhabi, using custom-built tooling for data pre-processing and model training. Falcon significantly outperforms GPT-3 at a fraction of the cost and matches the performance of similarly sized LLMs from DeepMind (Chinchilla), Google (PaLM-62B), and Anthropic.

Projects

Falcon Scientific Overview

“Falcon Chatbot” Coming soon.

In the news

Stay up-to-date on the latest headlines with our daily news roundup.

UAE's Falcon 40B is now Royalty Free

www.tii.ae

May 31, 2023

“Technology Innovation” shares the “Falcon 40B” model as open source

www.alittihad.ae

May 26, 2023

Innovation Institute makes “Falcon 40B” open source for research and commercial use

www.wam.ae

May 25, 2023

Abu Dhabi makes its Falcon 40B AI model open source

www.reuters.com

May 25, 2023

Abu Dhabi makes its large-scale Falcon 40B AI model open source

CGTN

May 25, 2023

Abu Dhabi's TII makes large language model Falcon open source

thenationalnews.com

May 25, 2023

Technology Innovation Institute shares the “Falcon 40B” model as open source for research and commercial use

www.zawya.com

May 25, 2023

Technology Innovation Institute launches Falcon Large Language Model

www.wam.ae

March 16, 2023

'Cheaper and faster' ChatGPT rival being built in Abu Dhabi

www.thenationalnews.com

March 15, 2023

Abu Dhabi-based Technology Innovation Institute introduces Falcon LLM

www.zawya.com

March 16, 2023

UAE's ChatGPT rival launched in Abu Dhabi with 40 billion parameters

www.khaleejtimes.com

March 15, 2023

Ebtesam Almazrouei: “Falcon”, an Emirati model that rivals “ChatGPT 3”

www.albayan.ae

May 7, 2023

Can UAE-built Falcon rival global AI models? - Middle East AI News

soundcloud.com

March 16, 2023

TII introduces Falcon LLM with 40bn parameters

www.tradearabia.com

March 15, 2023

Innovation Institute launches the “Falcon” language model

www.alkhaleej.ae

March 16, 2023

Technology Innovation Institute in Abu Dhabi launches the “Falcon” language model with 40 billion parameters

alwahdanews.ae

March 15, 2023

Abu Dhabi launches a rival to “ChatGPT”: the “Falcon” language model

cnnbusinessarabic.com

March 15, 2023

“Cheaper and faster” ChatGPT rival being built in Abu Dhabi

www.barabic.com

March 15, 2023

Innovation Institute launches the “Falcon” language model

www.msn.com

March 16, 2023

Abu Dhabi-based Technology Innovation Institute introduces Falcon LLM

www.zawya.com

March 15, 2023

Falcon Big Language Model Is Introduced by the UAE’s Technological Innovation Institute

www.techversions.com

March 15, 2023